Download & Install

Hardware requirements

Warp is built to perform all calculations on GPUs using Nvidia’s CUDA platform. Thus, your system needs at least one recent Nvidia GPU with at least 8 GB of memory, although 11 GB or more will make things easier. Consumer-grade GeForce models work just as well as the more expensive Quadro and Tesla cards. The minimum architecture is Maxwell; this comprehensive table shows which GPUs are Maxwell or newer.
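To check an existing machine against these requirements, the sketch below queries `nvidia-smi` (installed with the Nvidia driver) and compares each GPU’s memory and CUDA compute capability with the minimums above. Maxwell corresponds to compute capability 5.x, so 5.0 is used as the floor. It assumes Python 3.7+ and a driver recent enough to support the `compute_cap` query field; treating 8 GB as 8000 MiB is our own allowance for cards that report slightly less than their nominal size.

```python
import subprocess

# Maxwell GPUs have CUDA compute capability 5.x, so 5.0 is the lowest acceptable value.
MIN_COMPUTE_CAPABILITY = (5, 0)
# Assumption: nvidia-smi may report slightly less than the nominal size, so "8 GB" is treated as 8000 MiB.
MIN_MEMORY_MIB = 8000


def parse_gpu_line(line):
    """Parse one CSV line such as 'NVIDIA GeForce GTX 1080 Ti, 11264, 6.1'."""
    parts = [p.strip() for p in line.split(",")]
    name = ", ".join(parts[:-2])  # tolerate commas in the GPU name
    memory_mib = int(parts[-2])
    major, minor = (int(x) for x in parts[-1].split("."))
    return name, memory_mib, (major, minor)


def meets_requirements(memory_mib, compute_capability):
    """True if the GPU has enough memory and is Maxwell or newer."""
    return memory_mib >= MIN_MEMORY_MIB and compute_capability >= MIN_COMPUTE_CAPABILITY


def query_gpus():
    """Run nvidia-smi and return (name, memory in MiB, compute capability) per GPU."""
    # 'compute_cap' is only available as a query field in recent Nvidia drivers.
    out = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total,compute_cap",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_gpu_line(line) for line in out.splitlines() if line.strip()]


def report():
    """Print each detected GPU and whether it meets the minimums (needs the Nvidia driver)."""
    for name, mem, cc in query_gpus():
        status = "OK" if meets_requirements(mem, cc) else "below minimum"
        print(f"{name}: {mem} MiB, compute capability {cc[0]}.{cc[1]} -> {status}")
```

Call `report()` on the processing machine; any GPU marked “below minimum” falls short of the requirements listed above.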

If you bought a Gatan K3 camera with the GPU option, you already have all the Windows hardware you will likely need to process data from that camera on-the-fly! The K3 server includes a powerful Quadro P6000 with 24 GB of memory. It can handle 3 parallel processes (see instructions below) to achieve up to 300 40-frame non-superresolution counting movies per hour. We have now switched all of our K3 pre-processing to the K3 server.

Before that, we had the following configuration:

- AMD Threadripper with 16 cores

- 128 GB RAM

- 4x GeForce 1080 Ti (Warp 1.0.0 was quite slow)

- 256 GB SSD

- 10 Gbit Ethernet

- 4K display (UI claustrophobia sets in below 2560×1440)

The current version can handle 300+ TIFF movies (K2 counting, 40 frames) per hour on a single GeForce 1080 Ti GPU. This is likely faster than most facilities can generate data. If you need more speed, adding more GPUs to the system will increase performance almost linearly, i.e., 2 GPUs give almost 2x the speed.
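The scaling claim can be turned into a quick capacity estimate. The sketch below uses the 300 movies per hour per GPU quoted above (a budget of about 12 s per movie) and models “almost linear” scaling with a hypothetical `efficiency` factor of 0.9 for multi-GPU setups; both numbers are placeholders to replace with your own measurements.

```python
# Throughput quoted above for one GeForce 1080 Ti (K2 counting TIFFs, 40 frames).
MOVIES_PER_HOUR_PER_GPU = 300


def seconds_per_movie(movies_per_hour):
    """Average time budget per movie at a given throughput."""
    return 3600 / movies_per_hour


def throughput(n_gpus, per_gpu=MOVIES_PER_HOUR_PER_GPU, efficiency=0.9):
    """Estimated movies per hour for n_gpus.

    'efficiency' is a hypothetical factor standing in for "almost linear":
    one GPU delivers per_gpu, n > 1 GPUs deliver n * per_gpu * efficiency.
    """
    if n_gpus == 1:
        return per_gpu
    return n_gpus * per_gpu * efficiency


def gpus_needed(acquisition_rate, per_gpu=MOVIES_PER_HOUR_PER_GPU, efficiency=0.9):
    """Smallest GPU count whose estimated throughput keeps up with acquisition."""
    if per_gpu <= 0 or efficiency <= 0:
        raise ValueError("per_gpu and efficiency must be positive")
    n = 1
    while throughput(n, per_gpu, efficiency) < acquisition_rate:
        n += 1
    return n
```

Under these assumptions, `gpus_needed(500)` returns 2, since two GPUs are estimated at 540 movies per hour.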

Software prerequisites

- Windows 7 or later (10 if you want to see what the progress bar mouse eats! 🐭🍓)

- .NET Framework 4.7 or later

- Visual C++ 2017 Redistributable

- Latest GPU driver from Nvidia
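On Windows, the installed .NET Framework 4.x version can be checked in the registry before running the installer. This Python sketch reads the `Release` value under `HKLM\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full`, following Microsoft’s documented approach, where 460798 is the smallest `Release` value corresponding to 4.7. The function names are our own.

```python
# .NET Framework 4.x records its exact version as a "Release" DWORD in the registry.
# Any Release value >= 460798 means 4.7 or later.
NET47_MIN_RELEASE = 460798
NDP_KEY = r"SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full"


def has_net47_or_later(release):
    """True if a .NET Framework Release value corresponds to 4.7 or later."""
    return release is not None and release >= NET47_MIN_RELEASE


def read_net_release():
    """Return the installed .NET Framework 4.x Release value, or None if absent (Windows only)."""
    import winreg  # standard library, but only available on Windows

    try:
        with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, NDP_KEY) as key:
            value, _value_type = winreg.QueryValueEx(key, "Release")
            return int(value)
    except FileNotFoundError:
        # Key or value missing: no .NET Framework 4.5+ installation.
        return None
```

Usage: `has_net47_or_later(read_net_release())`.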

Amazon cloud

If you don’t want to invest in your own hardware just yet, you can use (or test) Warp on an AWS instance thanks to Michael Cianfrocco. Here is Michael’s user guide.

Download

- Warp 1.0.9 installer (what’s new?)

- Warp nightly build 2020-11-04 (copy and replace files in 1.0.9 installation directory)

- BoxNet training data

- BoxNet models (select latest)

Installation

Make sure all prerequisites are installed, and execute Warp’s installer. By default, the installer will suggest the current user’s program directory. If you’d like to make Warp available to other users (although running it under a single account is probably more convenient), please make sure the installation directory can be written to without administrator privileges. Otherwise, application settings and re-trained BoxNet models cannot be saved.
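One way to confirm that the chosen installation directory is writable without administrator privileges is to run a quick check from a normal (non-elevated) session. The sketch below tries to create a temporary file there, which reflects the actual NTFS permissions more reliably than `os.access()` on Windows. The function name is hypothetical.

```python
import tempfile


def is_writable_without_admin(directory):
    """Return True if the current user can create files in 'directory'.

    Run this from a non-elevated session so the result reflects what Warp
    will see when it saves settings and re-trained BoxNet models.
    """
    try:
        # The temporary file is deleted automatically when closed.
        with tempfile.TemporaryFile(dir=directory):
            pass
        return True
    except OSError:
        # Covers permission errors as well as a missing directory.
        return False
```

Pass the Warp installation directory; a `False` result means settings and re-trained models could not be saved there.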

Download the latest BoxNet models. There are two prefixes: BoxNet2 and BoxNet2Mask – please refer to the BoxNet user guide page to find out what they mean. If you’d like to be able to re-train BoxNet models using the central data set in addition to your data, download the training data as well. Extract the models into [Warp directory]/boxnet2models, and the training data into [Warp directory]/boxnet2data.
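If you prefer to script this step, the sketch below extracts a downloaded archive into the expected subfolder. It assumes the downloads are .zip files; the paths you pass in are placeholders, and `extract_boxnet_archive` is a hypothetical helper, not part of Warp.

```python
import zipfile
from pathlib import Path


def extract_boxnet_archive(archive, warp_dir, subdir):
    """Extract a downloaded .zip archive into [Warp directory]/<subdir>.

    subdir is "boxnet2models" for the models and "boxnet2data" for the
    training data. Returns the sorted names of the entries in the target folder.
    """
    target = Path(warp_dir) / subdir
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(target)
    return sorted(p.name for p in target.iterdir())
```

Call it once with `"boxnet2models"` for the model download and, if you want to re-train, once with `"boxnet2data"` for the training data.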

If you’re updating, just install the new version over the previous one – no need to uninstall first. All settings and BoxNet models will be preserved.

That’s it! Enjoy Warp 😘

Setting the number of processes per GPU

As of 1.0.7, Warp can run multiple processes on each GPU. If a GPU has enough memory to accommodate multiple movies in parallel, setting the number of processes to 2–3 will likely result in better resource utilization.

To set this, start Warp 1.0.7+ at least once and close it. Open the global.settings file in the installation directory with a text editor. Find the following line:

```xml
<Param Name="ProcessesPerDevice" Value="1" />
```

Set the value to the desired number, and save the file. Upon the next start, Warp will use this number of processes per GPU. If you experience crashes during on-the-fly processing, you may need to decrease the number of processes.
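Editing the file by hand is all that is needed, but when configuring several workstations a small script can help. This sketch rewrites only the `ProcessesPerDevice` value with a regular expression and leaves the rest of global.settings untouched. It assumes the file is UTF-8 encoded and contains the line as shown above; run it while Warp is closed, matching the manual procedure. The function name is hypothetical.

```python
import re

# Matches the line shown above, e.g. <Param Name="ProcessesPerDevice" Value="1" />
_PROCESSES_RE = re.compile(r'(<Param\s+Name="ProcessesPerDevice"\s+Value=")(\d+)(")')


def set_processes_per_device(settings_path, n):
    """Set ProcessesPerDevice in global.settings to n; returns the number of lines changed."""
    if n < 1:
        raise ValueError("ProcessesPerDevice must be at least 1")
    # newline="" keeps the file's existing line endings intact.
    with open(settings_path, encoding="utf-8", newline="") as f:
        text = f.read()
    new_text, count = _PROCESSES_RE.subn(lambda m: f"{m.group(1)}{n}{m.group(3)}", text)
    if count == 0:
        raise ValueError("ProcessesPerDevice not found; start and close Warp once first")
    with open(settings_path, "w", encoding="utf-8", newline="") as f:
        f.write(new_text)
    return count
```

For a K3 server like the one described above, `set_processes_per_device(path_to_global_settings, 3)` would set three processes per GPU.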

Compute environment

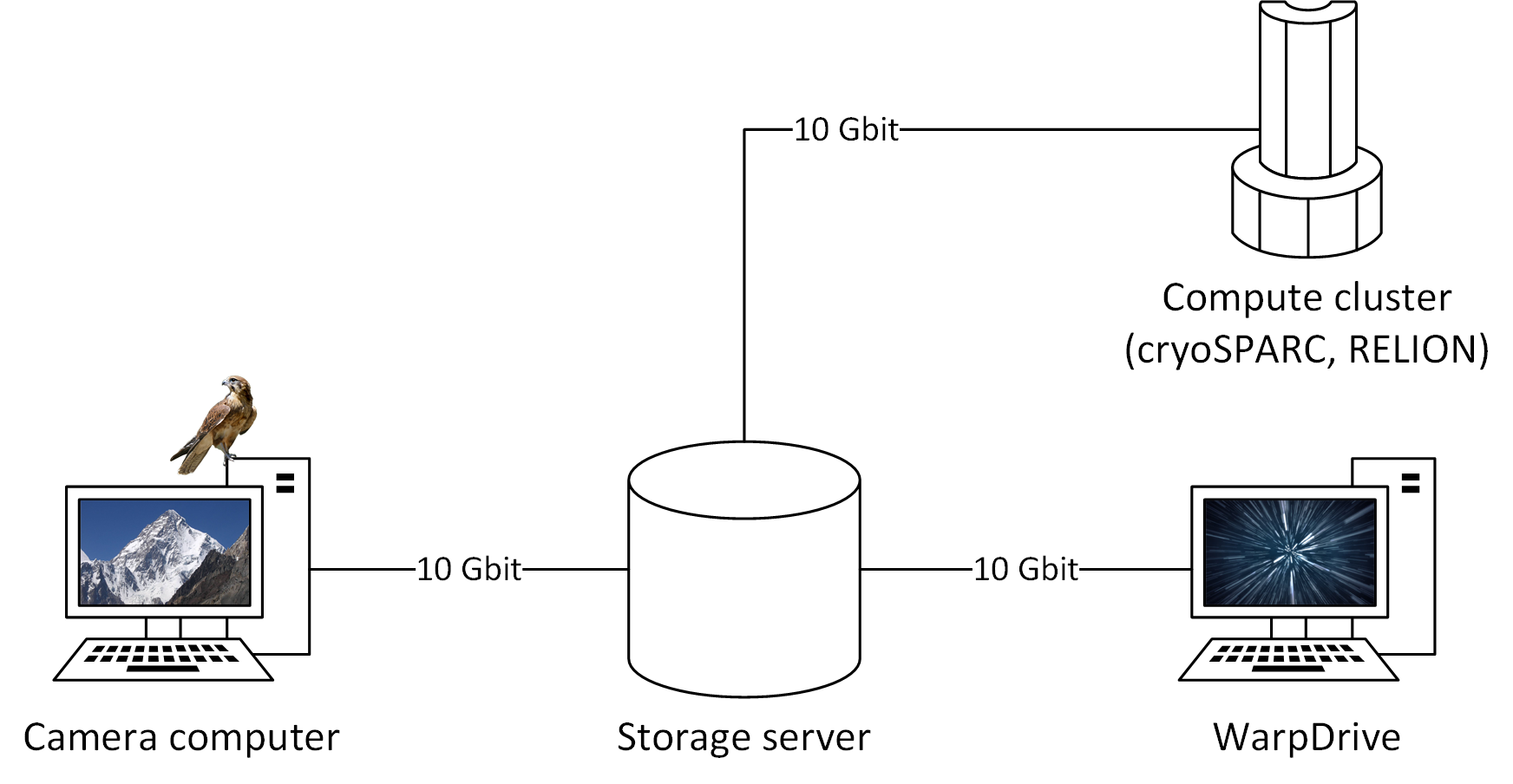

For full on-the-fly processing, Warp needs to be able to write to a file storage your cluster can access. For 90+ movies per hour, fast network connections are highly recommended. Our own network looks like this:

Change log

- Warp: EER support

- Warp: Defect map support (see quick start for how to use)

- Warp: Fixed crash after denoiser retraining (but it might still crash if you don’t have much GPU memory)

- Mmm! 🤤

- Improved performance

- Linear performance scaling on multi-GPU systems

- Lower memory consumption

- RESTful API to enable better integration in pipelines

- Easy preparation of training examples for tomogram denoising from the UI

- Improved navigation

- Stability fixes

- Updated BoxNet model

- Tilt series import from IMOD

- Major changes in the handling of TIFF files, see details

- File export required for RELION’s Bayesian particle polishing

- Experimental GUI for multi-particle refinement of tilt series, M

- Volume denoising in a separate command line tool

- Command line tool for generating pretty angular distribution plots from RELION’s output

- CUDA 10 – this will likely require a GPU driver update

- Micrograph denoising, making even very low-defocus particles visible.

- Faster generation of goodparticles_*.star files in on-the-fly mode.

- Average |CTF| plot in Overview tab to help you optimize the defocus range.

- During particle export, the weights for dose weighting are now re-normalized to sum up to 1.

- Better crash diagnostics.

- More flexible handling of gain references: In addition to the absolute path, the reference’s unique SHA1 hash is stored in each item’s processing settings. If you relocate or rename the gain reference later, items won’t be marked as outdated as long as the reference’s content stays the same.

- Flip and transpose operations for the gain reference image available in the UI.

- DM4 format support.

- More nagging about submitting BoxNet training data to the central repository.

- Faster processing: Running the full pipeline, you can now process 200+ movies per hour on a single GeForce 1080 GPU.

- Initial release.
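The gain-reference handling described in the change log (storing a SHA1 hash alongside the path) can be illustrated with a short Python sketch. This is not Warp’s own implementation; it only shows why a renamed or moved file with unchanged content produces the same hash and therefore need not mark items as outdated. Both function names are hypothetical.

```python
import hashlib


def sha1_of_file(path, chunk_size=1 << 20):
    """Hex SHA1 digest of a file's contents, read in chunks to limit memory use."""
    h = hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def gain_reference_changed(stored_sha1, current_path):
    """True only if the file at current_path differs in content from the stored reference.

    The hash depends on the bytes alone, so renaming or moving an otherwise
    identical gain reference does not count as a change.
    """
    return sha1_of_file(current_path) != stored_sha1
```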